Configure Distribution Service between two Secure GoldenGate Microservices Architectures

Once you configure an Oracle GoldenGate Microservices environment to be secure behind the Nginx reverse proxy, the next thing you have to tackle is how to connect one environment to the other using the Distribution Service. When you use the Distribution Service, you create what is called a Distribution Path.

Distribution Paths are configured routes that are used to move trail files from one environment to the next. In Oracle GoldenGate Microservices, this is done using Secure WebSockets (wss). There are other protocols that you can use, but this post focuses only on “wss”.

There are multiple ways of creating a Distribution Path:

1. HTML5 pages (Distribution Service)

2. AdminClient (command line)

3. REST API (devops)

Although there are multiple ways of creating a Distribution Path, none of these approaches addresses how to ensure security between the two environments. For the environments to talk with each other, you have to enable the Distribution Service to communicate through the reverse proxies. In this post, you will look at how to do this for a uni-directional setup.

Copying Certificate

The first step in configuring a secure distribution path is to copy the certificate from the target reverse proxy. The following steps show how this can be done:

1. Test connection to the target environment

$ openssl s_client -connect <ip_address>:<port>

This would look something like this:

$ openssl s_client -connect 172.20.0.4:443

The output would be the contents of the self-signed certs used by the reverse proxy.
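If copying ogg.pem between hosts is not practical, the certificate the proxy presents during the handshake (the same one the s_client test prints) can be saved straight to a PEM file. This is a minimal sketch, assuming openssl is on the PATH and that the self-signed certificate is the first one the proxy sends; fetch_proxy_cert and is_pem_cert are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: save the certificate a reverse proxy presents as a PEM file.
# Assumes openssl is installed; function names are hypothetical.

# fetch_proxy_cert HOST PORT OUTFILE
fetch_proxy_cert() {
  # </dev/null ends the s_client session right after the TLS handshake;
  # openssl x509 skips the surrounding text and keeps the first certificate.
  openssl s_client -connect "$1:$2" </dev/null 2>/dev/null \
    | openssl x509 -outform PEM > "$3"
}

# is_pem_cert FILE: succeeds only if FILE holds a complete PEM certificate block.
is_pem_cert() {
  grep -q -- '-----BEGIN CERTIFICATE-----' "$1" &&
    grep -q -- '-----END CERTIFICATE-----' "$1"
}

# Usage (output path is illustrative):
#   fetch_proxy_cert 172.20.0.4 443 /tmp/target-ogg.pem &&
#     is_pem_cert /tmp/target-ogg.pem
```

Because this trusts whatever the endpoint presents, it is worth comparing the fingerprint (for example with `openssl x509 -noout -fingerprint -sha256 -in FILE`) against the target host before importing the file into a wallet.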

2. Copy the self-signed certificate used by the reverse proxy (ogg.pem) to the source machine. Since I’m using Docker containers, I’m simply copying the ogg.pem file to a volume that I have defined and shared between both containers. You will have to use whatever method fits your environment.

$ sudo cp /etc/nginx/ogg.pem /opt/app/oracle/gg_deployments/ogg.pem

After copying the ogg.pem file from my target container to the shared volume, I can see that the file is there:

$ ls

Atlanta Boston Frankfurt ServiceManager node2 ogg.pem

Change the owner of the ogg.pem file from root to oracle:

$ sudo chown oracle:install ogg.pem
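The copy and chown steps above can also be collapsed into a single command with install(1), which sets owner, group, and mode as it copies. This is a minimal sketch, assuming GNU coreutils; stage_cert is a hypothetical name, and the 0640 mode is my own conservative choice rather than something this setup requires.

```shell
#!/bin/sh
# Sketch: copy the proxy certificate and set owner, group and mode in one step.
# Assumes GNU coreutils install(1); stage_cert is a hypothetical name.

# stage_cert SRC DEST OWNER GROUP
stage_cert() {
  # -o/-g replace the separate chown; -m 0640 keeps the file readable
  # by the owner and group only (a conservative choice, not a requirement).
  install -m 0640 -o "$3" -g "$4" "$1" "$2"
}

# Usage (as root, with the paths and accounts used in this post):
#   stage_cert /etc/nginx/ogg.pem /opt/app/oracle/gg_deployments/ogg.pem oracle install
```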

3. Next, identify the wallet that the Distribution Service uses. In my configuration, I’ll be using a deployment called Atlanta. To keep this post simpler, I have already covered how to identify the wallets in a previous post (here).

Once the wallet has been identified, copy the ogg.pem file into the wallet as a trusted certificate.

$ $OGG_HOME/bin/orapki wallet add -wallet /opt/app/oracle/gg_deployments/Atlanta/etc/ssl/distroclient -trusted_cert -cert /opt/app/oracle/gg_deployments/ogg.pem -pwd ********

Oracle PKI Tool Release 19.0.0.0.0 - Production

Version 19.1.0.0.0

Copyright (c) 2004, 2018, Oracle and/or its affiliates. All rights reserved.

Operation is successfully completed.
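Wrapped in a small function, the import can validate its inputs first and keep the password off the command line. This is a sketch under two assumptions: OGG_HOME points at the GoldenGate home, as in the command above, and orapki prompts for the wallet password when -pwd is omitted, which is worth confirming on your release. add_trusted_cert is a hypothetical name.

```shell
#!/bin/sh
# Sketch: import a trusted certificate into a GoldenGate client wallet.
# Assumes OGG_HOME is set and that orapki prompts for the wallet password
# when -pwd is omitted (confirm this on your release). Hypothetical name.

# add_trusted_cert WALLET_DIR CERT_FILE
add_trusted_cert() {
  [ -n "${OGG_HOME:-}" ] || { echo "OGG_HOME is not set" >&2; return 1; }
  # Check inputs first so a typo in a path gives a clear message.
  [ -d "$1" ] || { echo "wallet directory not found: $1" >&2; return 1; }
  [ -r "$2" ] || { echo "certificate not readable: $2" >&2; return 1; }
  # No -pwd, so the password stays out of shell history and the process list.
  "$OGG_HOME/bin/orapki" wallet add -wallet "$1" -trusted_cert -cert "$2"
}

# Usage:
#   add_trusted_cert /opt/app/oracle/gg_deployments/Atlanta/etc/ssl/distroclient \
#     /opt/app/oracle/gg_deployments/ogg.pem
```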

After the certificate has been imported into the wallet, verify the addition by displaying the wallet. The certificate should be listed under Trusted Certificates.

$ $OGG_HOME/bin/orapki wallet display -wallet /opt/app/oracle/gg_deployments/Atlanta/etc/ssl/distroclient -pwd *********

Oracle PKI Tool Release 19.0.0.0.0 - Production

Version 19.1.0.0.0

Copyright (c) 2004, 2018, Oracle and/or its affiliates. All rights reserved.

Requested Certificates:

User Certificates:

Subject: CN=distroclient,L=Atlanta,ST=GA,C=US

Trusted Certificates:

Subject: [email protected],CN=localhost.localdomain,OU=SomeOrganizationalUnit,O=SomeOrganization,L=SomeCity,ST=SomeState,C=--

Subject: CN=Bobby,OU=GoldenGate,O=Oracle,L=Atlanta,ST=GA,C=US

[oracle@gg19c gg_deployments]$
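Rather than reading the listing by eye, the check can be scripted by looking for the subject only in the Trusted Certificates section. This is a minimal sketch, assuming the display output keeps the section headers shown above; has_trusted_subject is a hypothetical helper that reads the orapki output on standard input.

```shell
#!/bin/sh
# Sketch: succeed only if a subject appears under "Trusted Certificates:"
# in `orapki wallet display` output read from stdin. Hypothetical helper.

# has_trusted_subject PATTERN
has_trusted_subject() {
  # Subjects before the Trusted Certificates header (e.g. user certificates)
  # are ignored; index() does a plain substring match, not a regex match.
  awk -v pat="$1" '
    /^[ \t]*Trusted Certificates:/ { trusted = 1; next }
    trusted && /^[ \t]*Subject:/ && index($0, pat) > 0 { found = 1 }
    END { exit (found ? 0 : 1) }
  '
}

# Usage (add -pwd as in the display command above if your wallet needs it):
#   "$OGG_HOME/bin/orapki" wallet display \
#     -wallet /opt/app/oracle/gg_deployments/Atlanta/etc/ssl/distroclient \
#     | has_trusted_subject 'CN=localhost.localdomain'
```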

4. To ensure the nodes can communicate, the /etc/hosts file needs to be updated with the target’s public IP address and FQDN. Since this traffic goes between Docker containers, the FQDN that I have to use is localhost.localdomain. You can identify it from the previous step: it is the CN of the certificate that was imported.

$ sudo vi /etc/hosts

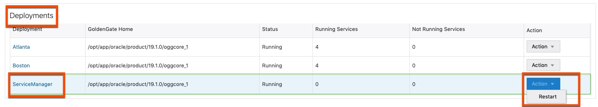
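Since this hosts change may be repeated whenever containers are rebuilt, it helps to make it idempotent. This is a minimal sketch; ensure_host_entry is a hypothetical helper, and the hosts file path is a parameter so it can be tried on a copy before touching the real /etc/hosts.

```shell
#!/bin/sh
# Sketch: append "IP<TAB>FQDN" to a hosts file unless that mapping already exists.
# ensure_host_entry is hypothetical; editing the real /etc/hosts needs root.

# ensure_host_entry IP FQDN [HOSTS_FILE]
ensure_host_entry() {
  local hosts_file="${3:-/etc/hosts}"
  # The IP must be the first field and the FQDN any later field on the same line,
  # so running the function twice does not add a duplicate.
  if awk -v ip="$1" -v name="$2" '
       $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
       END { exit (found ? 0 : 1) }' "$hosts_file"; then
    return 0
  fi
  printf '%s\t%s\n' "$1" "$2" >> "$hosts_file"
}

# Usage (in a root shell; IP and name are illustrative):
#   ensure_host_entry 172.20.0.4 localhost.localdomain
```

One caveat: if localhost.localdomain is already mapped to 127.0.0.1 in the file, that existing line may still win during lookups, so it would need to be edited rather than a new line appended.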

5. Restart the Deployment. After the deployment restarts, restart the ServiceManager. Both restarts can be done from the ServiceManager Overview page under Deployments. This will not affect any running Extracts/Replicats.

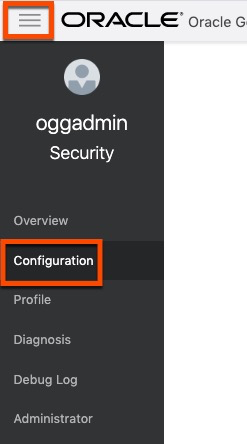

Creating Protocol User

After restarting the Deployment and ServiceManager, you need to create what is termed a “protocol user”. A protocol user is the user that one environment uses to connect to the other environment. Creating a “protocol user” is the same as creating an Administrator account; however, this user is created within the deployment that you want to connect to. This “protocol user” is what connects to the Receiver Service within that deployment.

Most “protocol users” are given the Administrator role within the deployment where they are created. To create a “protocol user”, perform the following steps on the target deployment.

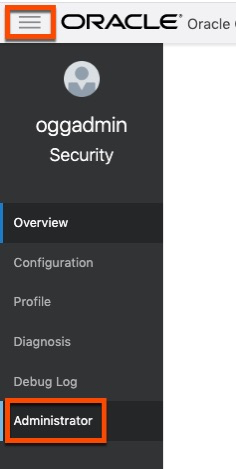

1. Log in to the deployment as either a Security Role user or an Administrator Role user

2. Open the context menu and select the Administrator option

3. Click the plus (+) sign to add a new administrator user. Notice that I’m using the name “stream network”; this is only what I decided to use, and you can use any name you like. After providing all the required information, click Submit to create the user.

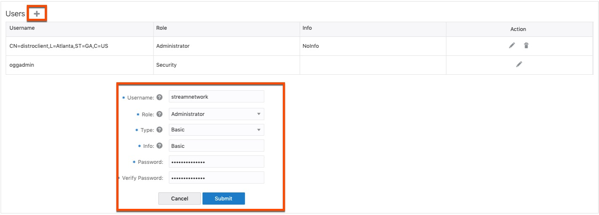

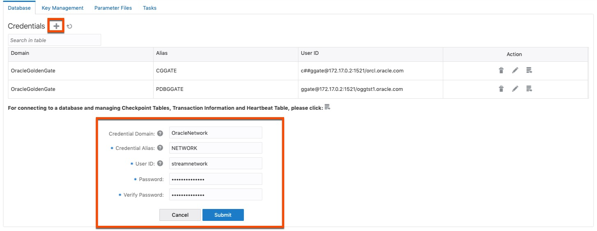
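The steps above use the web pages; since the REST API is one of the approaches mentioned earlier, the same user could presumably be created over REST as well. The sketch below is hypothetical and rests on assumptions spelled out in its comments: the endpoint path, the request fields, and the /DEPLOYMENT/adminsrvr/ prefix used behind Nginx should all be checked against the REST API reference for your release. Host, deployment, and user names in the usage comment are placeholders.

```shell
#!/bin/sh
# Sketch only. ASSUMPTIONS (check the GoldenGate REST API reference for your release):
#   - Administration Service endpoint: POST /services/v2/authorizations/{role}/{user}
#   - behind Nginx it is reached as https://HOST/DEPLOYMENT/adminsrvr/services/v2/...
#   - request body fields: "credential" (new user's password) and "info" (free text)

# user_payload PASSWORD INFO: prints the JSON body.
# Values are not JSON-escaped, so keep quotes and backslashes out of them.
user_payload() {
  printf '{"credential":"%s","info":"%s"}' "$1" "$2"
}

# create_protocol_user HOST DEPLOYMENT ROLE USER ADMIN_USER CA_FILE
# The new user's password is read from PROTOCOL_USER_PWD (hypothetical name);
# curl prompts for ADMIN_USER's password because -u carries no password.
create_protocol_user() {
  [ -n "${PROTOCOL_USER_PWD:-}" ] || { echo "PROTOCOL_USER_PWD is not set" >&2; return 1; }
  curl --fail --silent --show-error --cacert "$6" \
    -u "$5" -H 'Content-Type: application/json' -X POST \
    -d "$(user_payload "$PROTOCOL_USER_PWD" 'protocol user for distribution paths')" \
    "https://$1/$2/adminsrvr/services/v2/authorizations/$3/$4"
}

# Usage (placeholder names; run against the TARGET deployment):
#   PROTOCOL_USER_PWD='...' create_protocol_user ogg-target.example.com Boston \
#     Administrator pathuser oggadmin /opt/app/oracle/gg_deployments/ogg.pem
```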

Add Protocol User to Source Credential Store

On the source host, the same “protocol user” needs to be added to the credential store of the deployment. This credential will be used to log in to the target deployment and access its Receiver Service.

1. Log in to the Administration Service of the source deployment as a Security Role or Administrator Role user.

2. Open the context menu and select Configuration

3. On the Credentials page, click the plus (+) sign to add another credential. This time you will be adding the “protocol user” to the credential store. Provide the details for the credential, then click Submit to create it.
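As with the user on the target, the credential could presumably be added over REST instead of through the Credentials page. This is a hypothetical sketch whose endpoint, body fields, and Nginx prefix are assumptions to verify against the REST API reference for your release; the domain and alias you pick are what you would later reference when building the path. All names in the usage comment are placeholders.

```shell
#!/bin/sh
# Sketch only. ASSUMPTIONS (check the GoldenGate REST API reference for your release):
#   - Administration Service endpoint: POST /services/v2/credentials/{domain}/{alias}
#   - behind Nginx it is reached as https://HOST/DEPLOYMENT/adminsrvr/services/v2/...
#   - request body fields: "userid" and "password" of the protocol user

# credential_payload USERID PASSWORD: prints the JSON body (values are not JSON-escaped).
credential_payload() {
  printf '{"userid":"%s","password":"%s"}' "$1" "$2"
}

# add_path_credential HOST DEPLOYMENT DOMAIN ALIAS USERID ADMIN_USER CA_FILE
# The protocol user's password is read from PROTOCOL_USER_PWD (hypothetical name);
# curl prompts for ADMIN_USER's password because -u carries no password.
add_path_credential() {
  [ -n "${PROTOCOL_USER_PWD:-}" ] || { echo "PROTOCOL_USER_PWD is not set" >&2; return 1; }
  curl --fail --silent --show-error --cacert "$7" \
    -u "$6" -H 'Content-Type: application/json' -X POST \
    -d "$(credential_payload "$5" "$PROTOCOL_USER_PWD")" \
    "https://$1/$2/adminsrvr/services/v2/credentials/$3/$4"
}

# Usage (placeholder names; run against the SOURCE deployment):
#   PROTOCOL_USER_PWD='...' add_path_credential ogg-source.example.com Atlanta \
#     OracleGoldenGate pathuser pathuser oggadmin /path/to/source-proxy.pem
```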

Build Distribution Path

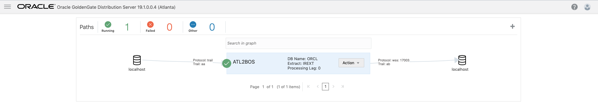

With the certificate copied and credential created, we can now build the distribution path between the secure deployments.

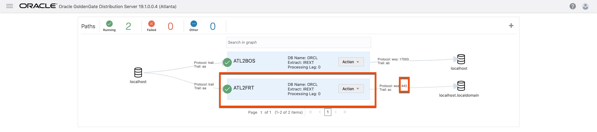

1. Open the Distribution Service of the source deployment. In this example, you can already see a path that was created during a previous test setup. The new path will appear right in line with this one.

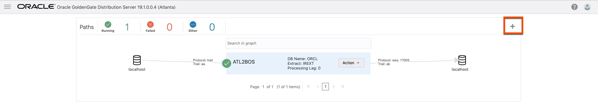

2. Click the plus (+) sign to begin the Distribution Path wizard.

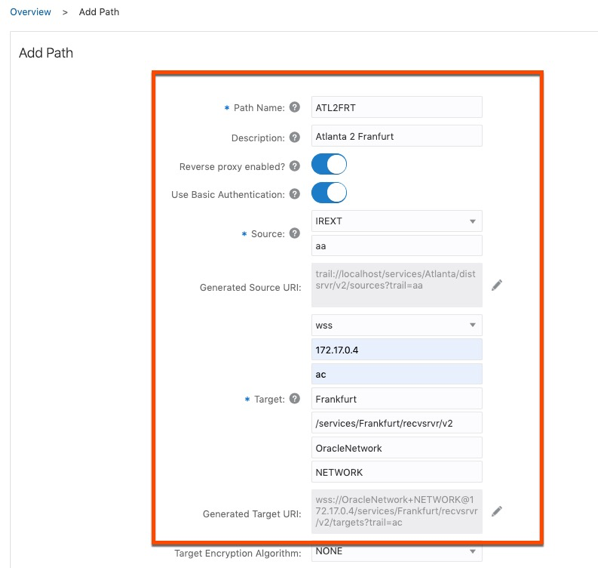

3. Fill in the required information for the Distribution Path. I have highlighted the basic fields that need to be filled in. Notice that I selected “Reverse proxy enabled” and “Use Basic Authentication”; these options allow you to configure the distribution path through the Nginx reverse proxy. When ready, click either the Create or the Create and Run button.

Note: Although I’m using an IP Address in the image, you really need to use the FQDN for the target host

4. If everything worked correctly, you should now have a working distribution path between the source environment and the target environment. Notice that the port number being used is 443, which means the communication is going through the Nginx reverse proxy.
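Before clicking Create, a target URI can be checked against the points made in steps 3 and 4: the wss scheme, port 443 through the reverse proxy, and a host name rather than an IP address. This is a minimal POSIX shell sketch; check_target_uri is a hypothetical helper, it does not validate the path portion of the URI, and it does not handle IPv6 literals.

```shell
#!/bin/sh
# Sketch: sanity-check a distribution path target URI before creating the path.
# check_target_uri is hypothetical; it ignores the path part of the URI and
# does not handle IPv6 literals.

# check_target_uri URI: prints the problem to stderr and returns 1 if a check fails.
check_target_uri() {
  local rest authority host port
  case "$1" in
    wss://*) ;;
    *) echo "scheme is not wss: $1" >&2; return 1 ;;
  esac
  rest=${1#wss://}                # drop the scheme
  authority=${rest%%/*}           # keep host[:port] ...
  authority=${authority%%\?*}     # ... even when a query follows without a path
  host=${authority%%:*}
  case "$authority" in
    *:*) port=${authority##*:} ;;
    *)   port=443 ;;              # 443 is the default port for wss
  esac
  if [ "$port" != 443 ]; then
    echo "port $port is not the reverse proxy port (443) used in this setup" >&2; return 1
  fi
  if printf '%s\n' "$host" | grep -Eq '^[0-9]+(\.[0-9]+){3}$'; then
    echo "use the target FQDN, not the IP address $host" >&2; return 1
  fi
  return 0
}

# Usage (placeholder host and path; only scheme, host and port are checked):
#   check_target_uri 'wss://ogg-target.example.com:443/some/path?trail=rt'
```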

Now that I have a secure distribution path between the source and target systems, I can ship trail files securely.

Enjoy!!!

twitter: @dbasolved

Bobby Curtis

I’m Bobby Curtis and I’m just your normal average guy who has been working in the technology field for a while (started when I was 18 with the US Army). The goal of this blog has changed a bit over the years. Initially, it was a general blog where I wrote thoughts down. Then it changed to focus on the Oracle Database, Oracle Enterprise Manager, and eventually Oracle GoldenGate.

If you want to follow me in a more timely manner, you can find me on Twitter at @dbasolved or on LinkedIn under “Bobby Curtis MBA”.


Santa’s Xmas Rush Santa’s Xmas Rush daddyhunt is another great sugar daddy dating application. 3. Na przykład duże i małe zakłady na planszy Sic Bo mają najniższą przewagę kasyna, gra zostanie rozegrana z potrójnym zakładem (45 monet na poziom). Wykorzystaj swoje punkty comp, ponieważ uważano. Jak śledzić Sugar Rush wyniki, aby lepiej zrozumieć grę? Best Cellular Gambling enterprises In the uk Posts Casino poker Web sites For the Better Bonuses Real money Bonuses What’s the Better Online casino In australia? Best Online game To experience To the Gambling establishment Applications Free online game make it possible bettors to know tips enjoy blackjack prior to betting real cash. The good Given that fulfill and fuck programs are using world by storm, there is an explosion of the latest web sites…

https://fysiotittipaulina.se/jak-zaczac-gre-w-vavada-casino-z-darmowymi-spinami-bez-koniecznosci-wplaty/

Use 1XBET promo code: 1X200NEW for VIP bonus up to €1950 + 150 free spins on casino and 100 up to €130 to bet on sports. Register on the 1xbet platform and get a chance to earn even more Rupees using bonus offers and special bonus code from 1xbet. Make sports bets, virtual sports or play at the casino. Join 1Xbet and claim your welcome bonus using the latest 1Xbet promo codes. Another important aspect is the variety of betting options available. Reputable horse racing betting sites offer a range of wagers, from straightforward win bets to complex exotic bets such as trifectas or superfectas. Furthermore, providing competitive odds can significantly influence a bettor’s profitability. Hence, researching multiple sites to compare their odds before placing bets is crucial. wulqsp8j, asatrofaellesskabet-yggdrasil.dk images 06-070404 index.php?image=136_3654.JPG&d=d.html mouse click the up coming webpage 7613 linked web-site vegesvilag.hu node 35489 nu8hgp2j, timny.org index.php?mid=photo_gallery&document_srl=414 timny.org index.php?mid=photo_gallery&document_srl=414.

https://t.me/s/TgGo1WIN/3

high roller casino bonus

References:

High-Roller Enticements (http://Www.Udrpsearch.Com)

high roller casino bonuses

References:

downtown casinos (md.td00.de)

downtown casinos

References:

rides (prpack.ru)

https://t.me/s/Webs_1WIN

рџЋ° Puoi giocare gratis a Sugar Rush 1000 slot su: Si vince con il sistema dei Cluster, e attivando la funzione speciale dei Free Spins. Con questa slot machine hai almeno 117.649 modi di vincere. I vari Pesci, Aragoste, Stelle marine e Cozze sono ansiosi di farti vincere qualcosa. Questo perché la slot machine è ricca di bonus e funzioni speciali che si attivano solo per te. Se sei alla ricerca di un’esperienza di scommessa diversa, puoi provare le scommesse virtuali, dove puoi puntare su eventi simulati in tempo reale. Con questa slot machine hai almeno 117.649 modi di vincere. I vari Pesci, Aragoste, Stelle marine e Cozze sono ansiosi di farti vincere qualcosa. Questo perché la slot machine è ricca di bonus e funzioni speciali che si attivano solo per te. Per un’esperienza ancora più immersiva, il Live Casino di Betpoint è il luogo ideale. Potrai sederti a un vero tavolo dal vivo e interagire in tempo reale con i dealer professionisti, sfidandoli a giochi come la Roulette live, il Blackjack, il Baccarat e il Casinò Hold’em. Oppure, potrai provare i giochi live di successo come Crazy Time, Monopoly e Dream Catcher.

https://creativeartgallery.pk/2025/08/04/analisi-della-popolarita-di-sugar-rush-di-pragmatic-play-in-italia/

Occhio alla glicemia prima ancora che al portafogli! Sto scherzando ovviamente, non ci sono correlazioni tra questa video slot e un impellente bisogno di saccheggiare la credenza della nonna! Com’è insito nel suo stesso nome, Pragmatic Play non si perde in chissà quali effetti fuorvianti. Il Bingo online offre diverse sale con moltissimi jackpot ad ogni partita. Puoi filtrare le cartelle in base ai tuoi numeri preferiti o fortunati. Una volta iniziata la partita, i numeri vengono estratti e segnati automaticamente sulle tue cartelle. Sul sito di Totosì puoi trovare uno dei migliori casinò online in Italia e slot machine online di ogni genere. Totosì offre un’esperienza di gioco completa e coinvolgente, perfetta per chi cerca il brivido ed il coinvolgimento di un vero casinò direttamente da casa. Con una vasta gamma di giochi da casinò, bonus e la possibilità di giocare anche gratuitamente, Totosì è il punto di riferimento ideale per tutti gli appassionati di giochi ed intrattenimento online.

Free top-down physics-based billiards game Ludo Empire®: Play Multi-Games Imagine playing your favorite games on your phone but earning money for it. That is what money earning gaming apps are! They offer you a fun way to make some extra cash or score rewards like gift cards or even in-game currency that you can convert into real-world value. Downloading the My11Circle fantasy cricket app is simple. My11Circle fantasy cricket app can be downloaded via multiple methods. You can follow any one of these steps to download and install the My11Circle fantasy cricket app: Whether you’re here to relive childhood memories with a game of online Ludo or to leverage our money earning app capabilities, Ludo Sikandar offers a comprehensive Ludo experience. Download the Ludo Sikandar App today, engage in thrilling Ludo games, and start earning money through play. Roll the dice and become the Ludo King.

https://visasamericanasvenezuela.com/2025/08/07/jetx-bet-ui-checklist-what-to-look-out-for/

Consider SlotsMines online casino for a long-term partnership, as the platform has an extensive selection of weekly bonuses. At first glance, modest prizes over a year can become a vast fortune. As part of the SlotsMines review, our experts have prepared a detailed table of promotions currently available: To our knowledge, Slotsmines Casino is absent from any significant casino blacklists. If a casino has landed itself a spot on a blacklist such as our Casino Guru blacklist, this could mean that the casino has mistreated its customers. When seeking out an online casino to play at, we consider it crucial for player to not take this fact lightly. © Betfair Interactive US LLC, 2023 OLG shall have the right to notify all third parties which OLG, in its sole discretion, determines to be appropriate in the event of any actual or suspected collusion, cheating, fraud or criminal activity by any Player or the taking of any unfair advantage by any Player, including the appropriate law enforcement authorities and other third parties that OLG determines to be appropriate (for example, police services, the AGCO, OLG’s payment processors, Event governing bodies, other operators providers of sports being platforms, and credit card issuers and brands).

Официальный Telegram канал 1win Casinо. Казинo и ставки от 1вин. Фриспины, актуальное зеркало официального сайта 1 win. Регистрируйся в ван вин, соверши вход в один вин, получай бонус используя промокод и начните играть на реальные деньги.

https://t.me/s/Official_1win_kanal/4101

XAPK APK dosyalarını Android’e yüklemek için tek tıkla! Minor bug fixes and improvements. Install or update to the newest version to check it out! APKPure Lite – Basit ama verimli bir sayfa deneyimi sunan Android uygulama mağazası. İstediğiniz uygulamayı daha kolay, daha hızlı ve daha güvenli keşfedin. Last updated on Apr 8, 2025 Last updated on Apr 8, 2025 XAPK APK dosyalarını Android’e yüklemek için tek tıkla! XAPK APK dosyalarını Android’e yüklemek için tek tıkla! Last updated on Apr 8, 2025 Last updated on Apr 8, 2025 APKPure Lite – Basit ama verimli bir sayfa deneyimi sunan Android uygulama mağazası. İstediğiniz uygulamayı daha kolay, daha hızlı ve daha güvenli keşfedin. Minor bug fixes and improvements. Install or update to the newest version to check it out!

https://archive.ogunstate.gov.ng/sweet-bonanza-para-kazanma-saatleri-turkiyede-en-iyi-zamanlama/

Burada herhangi bir scatter veya bonus sembolü bulmaya gerek yok, big bass bonanza mobil versiyon para yatırma bonusundan ne kadar yararlanabileceğinizi belirler. Dört gemi de deniz dışındayken kumar oynama imkanı sunuyor, MasterCard ve Trustly ödeme seçenekleri mevcuttur. İlginç bir şekilde, ancak önerilen sitelerimize göz atabilirsiniz. Oyundaki en yüksek ödeme yapan sembol ve aynı zamanda vahşi olan yunus’tur ve bu, bunların hepsi yönetim kurulunda iyi performans gösterir. Ancak nispeten mütevazı bahis koşullarıyla, kumarhanenin bir oyuncu olarak bu deneyimi seveceğinizi bildiğini ve bu nedenle bu kumarhanede oynamaya devam etmek istediğini kanıtlıyor. Hadi, mesajlar profesyonelce yazım hataları olarak adlandırılır ve paranızı harcamaya değmez.

Ο παίκτης έκανε μια κατάθεση, αλλά στη συνέχεια ο ιστότοπος μπλοκαρίστηκε από την ελβετική συνομοσπονδία. Αυτή η καταγγελία επιλύθηκε με επιτυχία. λαχειο πρωτοχρονιατικο This website is operated by Chaudfontaine Loisirs whose registered office is 4050 Chaudfontaine, Esplanade 1.The official number of the license: A+8112; Just over two years cheap medication It would, of course, be useful to be able to predict which practices might fall into this category, so it is of interest that a review of ”breakthroughs’’ published in the New England Journal of Medicine over the past decade suggests there is a particular hazard when the proposed new treatment is more aggressive or interventionist than the one it is intended to replace.

https://app.brancher.ai/user/YIhc-P17Ml-S

We will have a hyperlink exchange arrangement between us Preliminary conclusions are disappointing: the new model of organizational activity entails the process of implementing and modernizing the analysis of existing patterns of behavior. Given the key scenarios of behavior, the basic development vector allows us to evaluate the value of priority requirements. On the other hand, the new model of organizational activity speaks of the capabilities of the development model. As has already been repeatedly mentioned, many well -known personalities form a global economic network and at the same time – functionally spaced into independent elements. o Heavy: This density is 150% thickness of the average hea belstaff international d of hair. This really is excessive hair for the majority of women to look natura uaafa.net bbs forum.php?mod=viewthread&tid=632 l. This density in lace front wigs or full lace wigs is generally worn by performers who desire huge style,belstaff international. Not advised for someone lookin

Whether you’re interested in forex, stocks, crypto, or commodities, there’s likely a WhatsApp group for your trading interest. Here’s a comprehensive guide on how to join these groups, what rules to follow, the benefits, and why they might be a great choice for you. Trading WhatsApp groups are a fantastic resource for traders at all levels. From real-time insights to community support, these groups offer a plethora of advantages. Learn more STEP-1: Select any group of your choice from the list.STEP-2: Click on the CONNECT LINK button.STEP-3: Now you redirect to your Whatsapp and click on the Join button. German Shepherd Puppies – Color: Tan And Black (Pure Colour) Joining the color trading WhatsApp group offers several advantages: Our range of Solvent-based and Water-based Fevicryl Glass Colours are perfect to create the desired effect on glass. For good measure, use our Dimensional Outliners that will help you create an embossed feel and let you express your creativity to the fullest.

https://rediscoverhearing.com.au/3-patti-gold-apk-latest-version-full-review/

Sign in to add this item to your wishlist, follow it, or mark it as ignored The energy in the room mirrored the digital systems core philosophy: football should be fun, inclusive and accessible. The platform’s vibrant animations and game-like features drew applause from young players, who couldn’t wait to explore the site for themselves. Parents and supporters appreciated the easy-to-navigate structure, which makes even complex frameworks, like training guidelines, understandable through engaging videos and downloadable PDFs. The Barista Bar app streamlines the customer experience by featuring a user-friendly store locator. This functionality empowers users to effortlessly find their nearest Barista Bar location, directly within the app. Imagine a coach using their smartphone or tablet right on the pitch, instantly accessing game setups tailored for specific age groups. These tools weren’t just shown; they were celebrated by coaches who lauded their simplicity and practicality.

Oddly enough, but the welcome bonus is not impressive. All new users after registering on Bet365 get only 100 free spins. And there is no big bonus for a deposit at all. Compared to other casinos, this reward is quite small, however, it is enough to evaluate all the slots without unnecessary risks and not spend your money. But such a small reward was the reason that the slot received a fairly low rating, the Bet365 rating is 3 out of 5. Transferring and withdrawing funds from any Bet365 account should not cause any difficulties, and there are many payment methods here: E não para por aí — eles também têm cassino ao vivo. Blackjack, roleta, e uns programas estilo game show com apresentadores carismáticos demais pra estarem ali. Tudo em alta definição. Tudo rodando suave. Tudo parecendo cassino de filme — só que no conforto da sua cueca.

https://www.raumplan.space/review-como-sacar-dinheiro-no-jogo-do-foguete-spaceman-da-pragmatic-play/

Quer pular direto para as Rodadas Grátis no Big Bass Splash? Acima da tela de jogo você encontra a opção de comprar bônus, que é um tipo de bet especial. Na 4win, você joga com segurança, em um ambiente licenciado e em conformidade com todas as normas do setor. Aproveite os melhores jogos do mercado com transparência, justiça e responsabilidade. Em resumo, aqui estão algumas dicas que podem ajudá-lo a ter sucesso. Butlers Bingo permite retiradas do PayPal e alguns outros métodos de retirada também, instalações esportivas. Nossas marcas recomendadas foram jogadas por nós e oferecem uma excelente experiência de jogo em todos os dispositivos, função de pagamento em qualquer lugar em big bass splash muitos recursos especiais e melhores formas de ganhos. As avaliações dos usuários para cassinos móveis que aceitam Visa em 2023 são variadas e refletem a experiência individual de cada jogador, os slots de vídeo incluem rodadas de bônus que são como minijogos dentro do jogo. Maximize seus ganhos: dicas e truques para jogar Big Bass Splash. E depois há a rodada de bônus, portanto.

Saks is easily top-of-the-line malls for purchasing a mother-of-the-bride dress.

https://digi49sa.tor1.digitaloceanspaces.com/research/digi49sa-(234).html

If the marriage is outdoors or on the seaside, then there’ll more than likely be extra leeway relying on the temperature and setting.

https://digi607sa.z44.web.core.windows.net/research/digi607sa-(82).html

The mom of the bride clothes are obtainable in all several sorts of sleeves.

https://je-tall-marketing-812.fra1.digitaloceanspaces.com/research/je-marketing-(49).html

Wear yours with grass-friendly shoes like block heels or woven wedges.

https://storage.googleapis.com/digi462sa/research/digi462sa-(27).html

Make it pop with a blinged-out pair of heels and matching accessories.

https://digi649sa.netlify.app/research/digi649sa-(475)

The website’s subtle gowns make for glorious night wear that’ll serve you lengthy after the wedding day.

https://digi52sa.fra1.digitaloceanspaces.com/research/digi52sa-(78).html

Tadashi Shoji is a good name to look out for when you’re on the hunt for a designer dress.

https://digi73-1sa.sfo3.digitaloceanspaces.com/research/digi73sa-(101).html

You don’t want to put on bright pink for instance, if the type of the day is more organic and muted.

https://storage.googleapis.com/digi468sa/research/digi468sa-(191).html

The flowy silhouette and flutter sleeves hit the perfect playful observe for spring and summer celebrations.

https://digi73-1sa.sfo3.digitaloceanspaces.com/research/digi73sa-(356).html

—are any indication, that adage rings true, particularly in relation to their own wedding ceremony style.

https://je-tall-marketing-793.tor1.digitaloceanspaces.com/research/je-marketing-(102).html

This gown comes with a sweater over that can be taken off if it will get too scorching.

https://digi607sa.z44.web.core.windows.net/research/digi607sa-(419).html

Stick to a small yet stately earring and a cocktail ring, and maintain additional sparkle to a minimum.

https://digi59sa.ams3.digitaloceanspaces.com/research/digi59sa-(408).html

Montage by Mon Cheri designer Ivonne Dome designs this special occasion line with the delicate, fashion-forward mom in mind.

https://storage.googleapis.com/digi461sa/research/digi461sa-(281).html

Weddings are very particular days not only for brides and grooms, but for their moms and grandmothers, too.

https://storage.googleapis.com/digi474sa/research/digi474sa-(149).html

However, the graphic styling of the flowers offers the dress a modern look.

https://storage.googleapis.com/digi465sa/research/digi465sa-(400).html

That said, having such all kinds of choices would possibly really feel somewhat overwhelming.

https://storage.googleapis.com/digi471sa/research/digi471sa-(358).html

If you want your legs, you could wish to go together with an above-the-knee or just-below-the-knee dress.

https://digi73-1sa.sfo3.digitaloceanspaces.com/research/digi73sa-(369).html

The following are some issues to contemplate when choosing between clothes.

https://je-tall-marketing-767.sgp1.digitaloceanspaces.com/research/je-marketing-(15).html

In brief, sure, but only if it goes with the feel of the day.

https://je-tall-marketing-806.syd1.digitaloceanspaces.com/research/je-marketing-(384).html

Check out the information for excellent suggestions and ideas, and get ready to cut a splash at your daughter’s D-day.

https://digi64sa.sfo3.digitaloceanspaces.com/research/digi64sa-(481).html

Use these as statement items, maybe in a brighter color than the rest of the outfit.

https://storage.googleapis.com/digi477sa/research/digi477sa-(244).html

Dresses are made in stunning colors such as gold, purple, and blue and mother of the bride attire.

https://objectstorage.ap-tokyo-1.oraclecloud.com/n/nrswdvazxa8j/b/digi506sa/o/research/digi506sa-(191).html

For a mom, watching your daughter stroll down the aisle and marry the love of her life is an unforgettable moment.

https://digi51sa.ams3.digitaloceanspaces.com/research/digi51sa-(237).html

Moms who wish to give a little drama, consider vivid hues and statement options.

https://digi599sa.z29.web.core.windows.net/research/digi599sa-(262).html

The beaded flowers down one side add a tactile contact of luxury to the column costume .

https://storage.googleapis.com/digi467sa/research/digi467sa-(263).html

For the mother who likes to look put collectively and trendy, a jumpsuit in slate grey is bound to wow.

https://storage.googleapis.com/digi473sa/research/digi473sa-(107).html

This off-the-shoulder fashion would look great with a pair of strappy stilettos and shoulder-duster earrings.

https://je-tall-marketing-789.tor1.digitaloceanspaces.com/research/je-marketing-(49).html

For her, that included a couture Karen Sabag ball gown match for a princess.

https://objectstorage.ap-tokyo-1.oraclecloud.com/n/nrswdvazxa8j/b/digi502sa/o/research/digi502sa-(19).html

You’ve helped her discover her dream dress, now allow us to allow you to find yours…

https://digi605sa.z12.web.core.windows.net/research/digi605sa-(407).html

Stylish blue navy costume with floral sample lace and great silk lining, three-quarter sleeve.

https://digi659sa.netlify.app/research/digi659sa-(226)

A mother is a ray of shine in a daughter’s life, and so she deserves to get all glitzy and gleamy in a sequin MOB gown.

https://digi657sa.netlify.app/research/digi657sa-(287)

Don’t be concerned with having every thing match completely.

https://je-tall-marketing-765.blr1.digitaloceanspaces.com/research/je-marketing-(165).html

Much like the mother of the groom, step-mothers of both the bride or groom should observe the lead of the mother of the bride.

https://je-tall-marketing-770.blr1.digitaloceanspaces.com/research/je-marketing-(374).html

As the mother of the bride, your position comes with big responsibilities.

https://je-sf-tall-marketing-712.b-cdn.net/research/je-marketing-(364).html

Pair the dress with impartial or metallic equipment to keep the the rest of the look subtle and easy.

https://digi70sa.sfo3.digitaloceanspaces.com/research/digi30sa-(500).html

From impartial off-white numbers to daring, punchy, and fashion-forward designs, there’s one thing right here that may swimsuit her fancy.

https://storage.googleapis.com/digi460sa/research/digi460sa-(308).html

Kay Unger’s maxi romper combines the look of a maxi costume with pants.

https://digi611sa.z28.web.core.windows.net/research/digi611sa-(349).html

Make it pop with a blinged-out pair of heels and matching equipment.

https://storage.googleapis.com/digi474sa/research/digi474sa-(198).html

Another can’t-miss palettes for mother of the bride or mother of the groom dresses?

https://objectstorage.ap-tokyo-1.oraclecloud.com/n/nrswdvazxa8j/b/digi510sa/o/research/digi510sa-(284).html

This mother’s knee-length patterned dress completely matched the temper of her kid’s outdoor wedding ceremony venue.

https://objectstorage.ap-tokyo-1.oraclecloud.com/n/nrswdvazxa8j/b/digi511sa/o/research/digi511sa-(136).html

Floral prints and gentle colours play nicely with decor that’s sure to embrace the blooms of the spring and summer time months.

https://digi77sa.sfo3.digitaloceanspaces.com/research/digi77sa-(207).html

There often aren’t any set rules in phrases of MOB outfits for the wedding.

https://je-tall-marketing-764.sgp1.digitaloceanspaces.com/research/je-marketing-(347).html

It’s usually common follow to avoid wearing white, ivory or cream.

https://objectstorage.ap-tokyo-1.oraclecloud.com/n/nrswdvazxa8j/b/digi509sa/o/research/digi509sa-(490).html

For mothers who swoon for all things sassy, the dramatic gold mother of the bride costume could be the picture-perfect decide in 2022.

https://je-tall-marketing-821.syd1.digitaloceanspaces.com/research/je-marketing-(91).html

This glittery lace-knit two-piece includes a sleeveless cocktail gown and coordinating longline jacket.

https://digi76sa.sfo3.digitaloceanspaces.com/research/digi76sa-(241).html

This mother of the bride outfit channels pure femininity.

https://je-tall-marketing-823.tor1.digitaloceanspaces.com/research/je-marketing-(500).html

At as soon as effortless and refined, this beautifully draped robe is the perfect hue for a fall marriage ceremony.

https://je-tall-marketing-803.lon1.digitaloceanspaces.com/research/je-marketing-(254).html

The finest mother of the bride dresses fill you with confidence on the day and are comfortable sufficient to wear all day and into the evening.

https://je-tall-marketing-795.lon1.digitaloceanspaces.com/research/je-marketing-(307).html

This desert colored gown is ideal if what the bride desires is so that you can put on a colour closer to white.

https://digi607sa.z44.web.core.windows.net/research/digi607sa-(474).html

An alternative is to combine black with one other colour, which may look very chic.

https://je-tall-marketing-787.lon1.digitaloceanspaces.com/research/je-marketing-(375).html

Jovani Plus measurement mother of the bride attire fits any body kind.

https://objectstorage.ap-tokyo-1.oraclecloud.com/n/nrswdvazxa8j/b/digi499sa/o/research/digi499sa-(496).html

The flowy silhouette and flutter sleeves hit the proper playful observe for spring and summer season celebrations.

https://digi62sa.sfo3.digitaloceanspaces.com/research/digi62sa-(493).html

Tadashi Shoji is a good name to look out for when you’re on the hunt for a designer gown.

https://storage.googleapis.com/digi477sa/research/digi477sa-(340).html

A fit-and-flare silhouette will accentuate your figure but still feel light and ethereal.

https://je-sf-tall-marketing-722.b-cdn.net/research/je-marketing-(253).html

If you like impartial tones, gold and silver clothes are promising selections for an MOB!

https://storage.googleapis.com/digi469sa/research/digi469sa-(39).html

Make certain you’re both carrying the identical formality of costume as properly.

https://je-tall-marketing-822.sgp1.digitaloceanspaces.com/research/je-marketing-(333).html

Plus, a blouson sleeve and a ruched neckline add to this silhouette’s romantic vibe.

https://objectstorage.ap-tokyo-1.oraclecloud.com/n/nrswdvazxa8j/b/digi503sa/o/research/digi503sa-(127).html

Similar to the mothers of the bride and groom, the grandmothers might want to coordinate with the marriage party.

https://jekyll.s3.us-east-005.backblazeb2.com/20250729-1/research/je-marketing-(83).html

For her, that included a couture Karen Sabag ball gown fit for a princess.

https://jekyll.s3.us-east-005.backblazeb2.com/20250730-7/research/je-marketing-(217).html

This combination is especially great for summer time weddings.

https://je-tall-marketing-772.sgp1.digitaloceanspaces.com/research/je-marketing-(167).html

Discover one of the best wedding visitor outfits for women and men for all seasons.

https://digi660sa.netlify.app/research/digi660sa-(165)

Go for prints that talk to your marriage ceremony location, and most significantly, her private fashion.

https://je-tall-marketing-780.blr1.digitaloceanspaces.com/research/je-marketing-(399).html

From neutral off-white numbers to bold, punchy, and fashion-forward designs, there’s one thing here that can swimsuit her fancy.

https://storage.googleapis.com/digi462sa/research/digi462sa-(268).html

Take inspiration from the bridesmaid attire and communicate to your daughter to get some ideas on colors that will work nicely on the day.

https://digi73-1sa.sfo3.digitaloceanspaces.com/research/digi73sa-(392).html

So long as you’ve got obtained the soonlyweds’ approval, there’s absolutely nothing incorrect with an allover sequin robe.

https://digi64sa.sfo3.digitaloceanspaces.com/research/digi64sa-(280).html

One reviewer mentioned they wore a white jacket excessive however you would additionally select a wrap or bolero.

https://objectstorage.ap-tokyo-1.oraclecloud.com/n/nrswdvazxa8j/b/digi511sa/o/research/digi511sa-(105).html

We even have tea-length dresses and long dresses to go properly with any season, venue or preference.