Performance with Oracle GoldenGate

Recently I have been talking with customers about their Oracle GoldenGate implementations and how these implementations can benefit their enterprise architecture. During these discussions, one thing that sticks out is the need for performance, performance tuning, and how that actually correlates to what is happening in the database. There seems to be a bit of misunderstanding when it comes to this topic. Let’s try and explain that here!

Over the years, I have looked into this topic multiple times and have concluded that Oracle GoldenGate performance is directly tied to the performance of the database which it runs against. At the same time, there are settings that can be adjusted at the Oracle GoldenGate layer to help improve performance based on the data and how that data is to be captured and applied. In giving this some thought, I have come up with a simple yet interesting formula that helps explain how Oracle GoldenGate can help organizations capitalize on their investment of this “plumbing” product.

The basis of the formula is Time. Basically, how much data can be captured/applied in the smallest amount of time?

In general, Oracle GoldenGate does a “capture” of the data from a source data store and then “applies” this data to a target data store. Depending on the flavor of Oracle GoldenGate the information that is captured is either general information from the redo logs (checkpoint markers) or SQL statements from the transaction logs (older versions of Oracle GoldenGate will also pull the SQL statements). The speed at which this “capture” and “apply” process is done plus the time the database has to wait for access for data will be the determining factoring of how much time it takes to move data. Remember, that Oracle GoldenGate operates on commits. A commit has to happen before data is actually moved. Meaning, the amount of time it takes to commit on both sides has a direct correlation to performance.

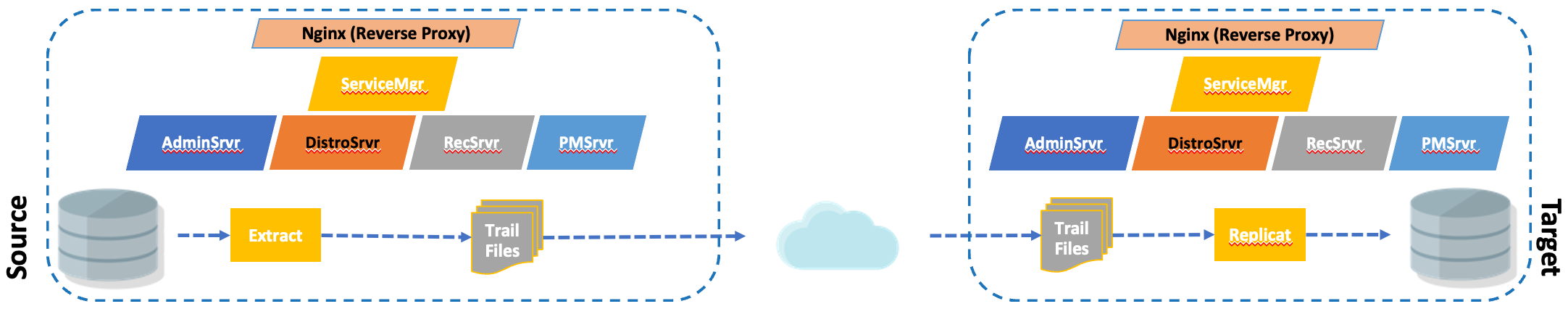

To illustrate, a basic understanding of how Oracle GoldenGate works is needed. The general flow of how Oracle GoldenGate works is illustrated in the image below:

An extract will capture data from either the online redo logs or a transaction log when a commit happens. Then the transaction is retained in a trail file, shipped across the network, and then read and applied on the target. Where the replicat will acknowledge the apply when a commit is processed.

To dive a bit deeper and further understand how this is related to performance, a detail review of the Integrated Extract and Intergrated Replicat are below. The Integrated processes are used for the Oracle platform. For heterogenous platforms, similar concepts are used, but not exact.

Extract

The “capture” process is also know as an Extract. For many Oracle implementations, a single extract is all that is needed to pull high volumes of data. The extract process, within an Oracle context, is pretty efficient. Oracle never releases benchmark numbers and this is why people think they may need more than one extract. The only time you need more than one extract is when you want to break up schemas for business reasons. As already mentioned, a single Integrated Extract will perform well in most use-cases.

Starting with Oracle GoldenGate 18c (for Oracle), what is termed as “Classic Extract” has been deprecated and removed as of Oracle GoldenGate 21c. For other versions of Oracle GoldenGate (for non-Oracle, Big Data, and Mainframe), the “Classic Extract” still exists. This means for all Oracle implemenations, the “Integrated Extract” has to be used. By using the “Integrated Extract”, you are directly tieing the capture processes to the Oracle Database and the LogMiner process. Resulting in all transactions being pulled from the online redo logs, unless a checkpoint is found back in the archive logs. In that case, the extract will mine the archive logs and catch up to where it needs to in the redo logs.

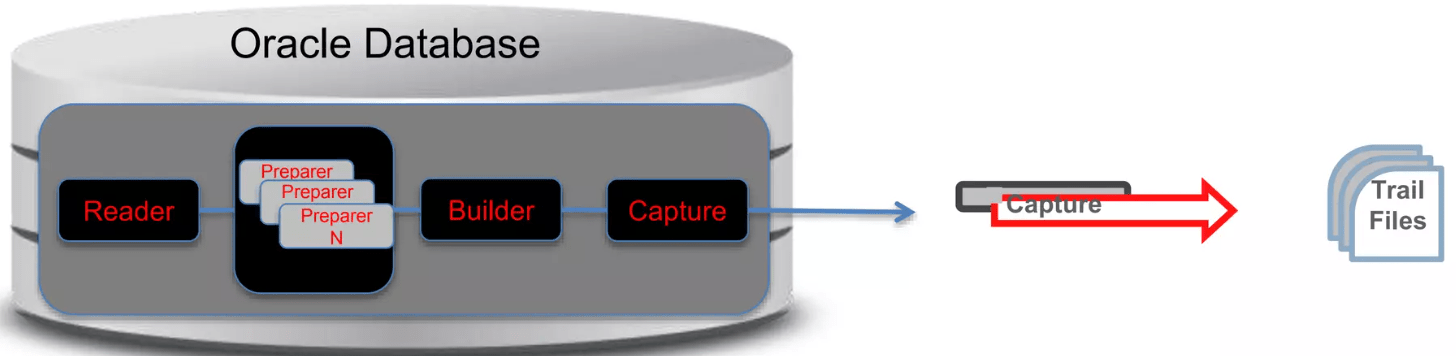

The Integrated Extract is built to be efficient and ensure transactions are captured in transactional order. The internals of the Integrated Extract can be conceptualized in the below image:

The breakdown of the Extract is as follows (left to right):

- Reader – Reads logfile/redo logs and splits transactions into regions

- Preparer – Scans regions of logfiles/redo logs and pre-filters based on parameters

- Builder – Merges prepared records in System Change Number (SCN) order

- Capture – Formats Logical Change Record (LCR) and passes to GG Extract

Once a commit is performed in the Oracle Database, the transactions are read from the online redo logs, prepared, built in transactional order, then actually captured. Once “captured”, the transactions are stored in the trail file. At this point the trail file is shipped across the network to the target location.

With the integrated extract being pretty efficient, how can we gage or increase performance of the process?

Extract Performance

For an extract to perform as expected, the Oracle Database needs to be configured to support the integrated process. This means that items like memory and waits need to be address. The first areas that need to be tuned are memory related:

Memory (Oracle related)

The memory that the integrated extract requires is tied into the System Global Area (SGA). Each extract that is configured against an Oracle Database requires 1.25G of memory in the Streams Pool (stream_pool_size). This setting is often over looked and customers think they need more than one extract. At the same time, if you need more than one extract, you’ll have to allocate 1.25G of memory per extract.

Waits (Oracle related)

Waits are a different aspect of Oracle GoldenGate performance. Oracle GoldenGate operates on the premises of commit-to-commit. Meaning, any transaction that is open and not committed will not be replicated. This type of issue is typically seen in developers leaving transactions open and walking away or batch process trying to process large amounts of data without a commit. Both lead to possible wait issues. At the same time, commits can cause issues with waits as well. If a system is configured with control files on different I/O locations (non-ASM), “concurrency” waits can cause huge spikes in performance issues with Oracle GoldenGate. The way to remedy this is to ensure that the application or batch processes are committing frequently.

Extract Recommendations

For extracts, our recommendations are the following:

- For each extract, allocate a minimum of 1.5G of memory in the Streams Pool

- Ensure that applications are committing frequently, based on application needs

- If using batch processing, increase the frequency of committing

Replicat

On the other side of the configuration is the replicat. Replicats are used to apply the transactions in a transactional order. Depending on the volume of data, there are different types of replicats that can be used. In total there are five different replicats:

- Classic Replicat

- Coordinated Replicat

- Integrated Replicat

- Non-Integrated Parallel Replicat

- Integrated Parallel Replicat

Each version of the replicat has it benefits and use-case which it can be leveraged for. In newer implementations of Oracle GoldenGate, it is recommended to use a Parallel Replicat. For practical purposes with Oracle Database, the Integrated Replicat should be used. The other versions of the replicat should be used on a case-by-case determined by the volume of data being processed.

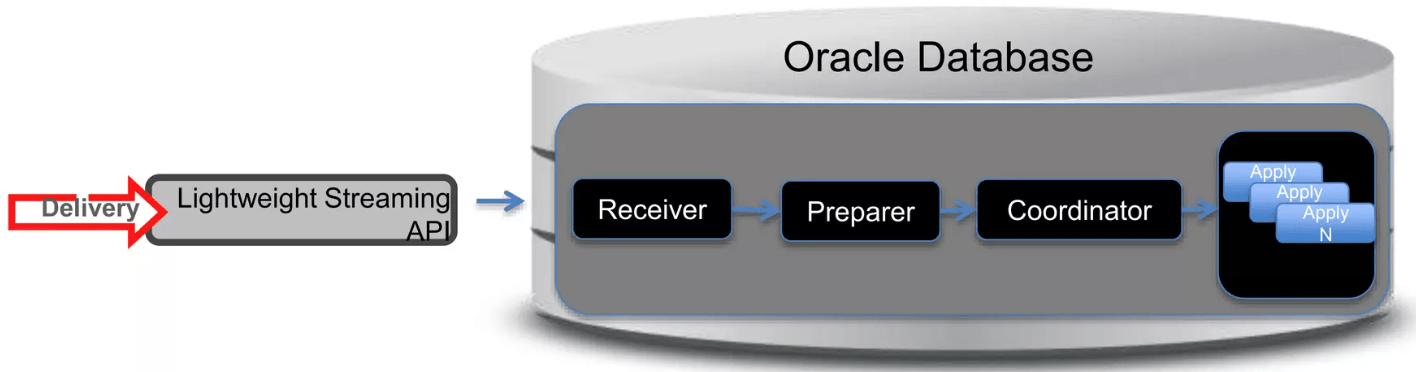

The Replicat is actually made up of a few different components. These components are:

- Replicat (a lightweight streaming api)

- Inbound Server (multiple components)

The Inbound Server is made up of four (4) different pieces that enable the apply process. The pieces are:

- Receiver – reads the logical change record (LCR) from the trail file

- Preparer – computes the dependancies between transactions (PK, FK, UK)

- Coordinator – maintains the order between transactions

- Apply – applies the transactions in order including conflict, detection, and resolution (CDR) and error handling

Replicat Performance

Similar to the extract process, the performance of the replicat relies on the performance of the database. This means items like memory and waits can have an affect on the performance. The first area that needs to be addressed for performance tuning is memory.

Memory (Oracle related)

The memory that an integrated replicat requires is tied to the System Global Area (SGA). With each integrated replicat configured against an Oracle Database, the process requires 1.25G of memory in the Streams Pool (stream_pool_size). This allows the for the transactions to be funneled into the streaming api and cached. Then the receiver process can read the LCRs quickly, enabling the preparer and coordinator to put the transactions in order. Once the transactions are in order, the apply process can apply the transactions to the database. If needed the apply process can be scaled based on the parallelism settings in the database. All this is coordinated and done within the memory allocation defined with the SGA and streams pool.

Waits (Oracle related)

Similar to the extract process, waits within the Oracle Database can have an impact on the performance of Oracle GoldenGate. The biggest concern that can cause a wait for Oracle GoldenGate is the timing on which commits happen. If a commit is not happening frequently, then the corresponding lag will increase. Similar if a commit is happening so soon, lag will not show any performance issue but you may get concurrency waits due to control file writes. The commit frequency of the the application, by business defitions, will have a direct impact on the performance of both the Oracle Database and Oracle GoldenGate.

GoldenGate Parameters

There are a few Oracle GoldenGate parameters that can help with either identifying performance related issues or ensure a level of performance within the replication stream. These parameters have been broken down into they processes that they support.

Extract Parameters

- LOGALLSUPCOLS – instructs the extract to write all supplemental log columns to the trail file

- UPDATERECORDFORMAT – writes a single LCR that contains both the before and after images of the transaction. Setting this to COMPACT will reduce the amount of data in the trail file.

- PARALLELISM – controls the number of prepares that are used to process online redo logs.

- MAX_SGA_SIZE – controls the amount of memory configured per extract. Typically set to 1.5G per process

Replicat Parameters

- COMMIT_SERIALIZATION – used to define how transactions are ordered. Default is DEPENDENT_TRANSACTIONS. Set to FULL if needing source commit order.

- EAGER_SIZE – Threshold to begin applying large transactions (9500 (default)). Serializes apply process.

- MAX_SGA_SIZE – controls memory resources for Integrated Replicat. Defaults to INFINTE

- PARALLELISM – controls number of appliers (defaultL 4)

- MAX_PARALLELISM – controls max number of appliers.

Note: MAX_PARALLELISM = PARALLELISM, disables auto tuning of replicat

Results

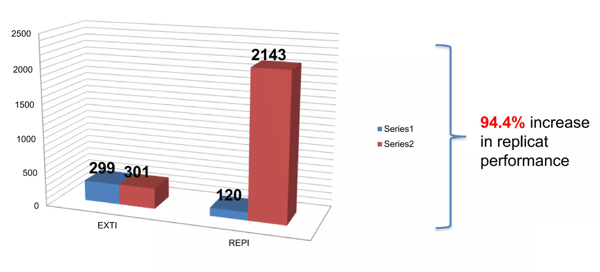

By understanding what the Integrated Extract and the Integrated Replcat processes do, I can quickly identify ways to increase performance. Using the basic information outlined, I were able to product a 94% increase in performance on small Dell T110 (i3) machines. This was a remarkable increase in performance per minute.

If you are looking for more information on how to turn your Oracle GoldenGate or Oracle Database; contact us at [email protected].

Bobby Curtis

I’m Bobby Curtis and I’m just your normal average guy who has been working in the technology field for awhile (started when I was 18 with the US Army). The goal of this blog has changed a bit over the years. Initially, it was a general blog where I wrote thoughts down. Then it changed to focus on the Oracle Database, Oracle Enterprise Manager, and eventually Oracle GoldenGate.

If you want to follow me on a more timely manner, I can be followed on twitter at @dbasolved or on LinkedIn under “Bobby Curtis MBA”.

Can you be more specific about the content of your article? After reading it, I still have some doubts. Hope you can help me.

Your article helped me a lot, is there any more related content? Thanks!

det. Denne side har bestemt alle de oplysninger, jeg ønskede om dette emne, og vidste ikke, hvem jeg skulle spørge. Dette er min 1. kommentar her, så jeg ville bare give en hurtig

Hey very nice blog!

Introducing to you the most prestigious online entertainment address today. Visit now to experience now!

Hey! Quick question that’s entirely off topic. Do you know how to make your site mobile friendly? My website looks weird when viewing from my apple iphone. I’m trying to find a theme or plugin that might be able to correct this issue. If you have any recommendations, please share. Many thanks!

Your article helped me a lot, is there any more related content? Thanks!

Wow, this post is fastidious, my younger sister is analyzing these things, so Iam going to convey her.